HyperPod checkpointless training features

See the following pages to learn about the training features in checkpointless training.

Topics

Amazon SageMaker HyperPod checkpointless training repositories

HyperPod checkpointless training

Checkpointless training is enabled via three optimization tracks that run in concert:

-

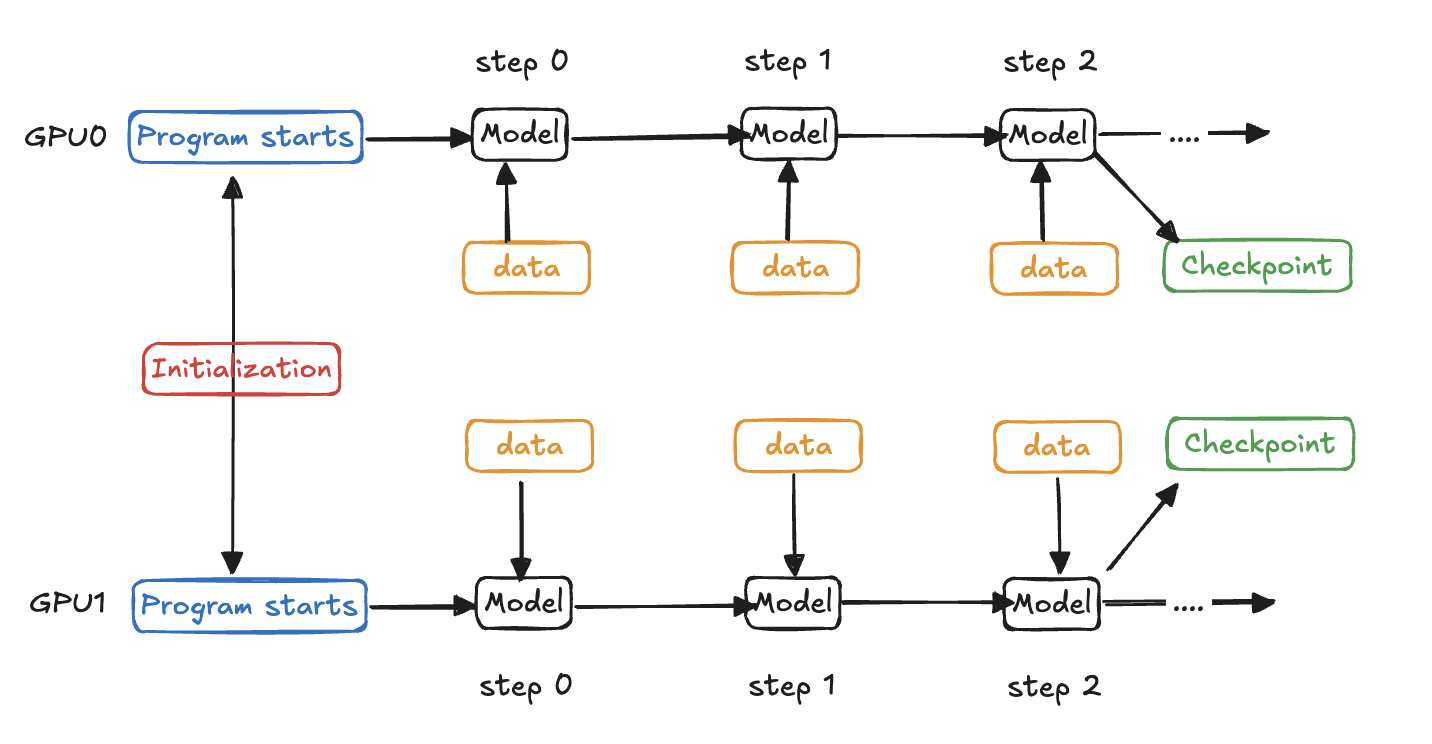

Communication initilization improvements (NCCL and Gloo) - Eliminate communication bottlenecks by decentralizing rank peer and ring information (red box below).

-

Data loading optimizations - Reduce the time required to serve the first batch of data during restart operations (orange boxes below).

-

Program restart overhead reduction - Minimize restart costs and enable checkpointless replenishment through process recovery on healthy nodes (blue and green boxes below).
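To make the idea of recovering inside the running process more concrete, the following toy simulation may help. It is a minimal sketch and not the HyperPod implementation: `SimulatedFault`, `train_step`, `run`, and the `peer_replica` variable are hypothetical names, and the sketch assumes that a healthy peer holds an in-memory copy of the training state from which a failed rank can be replenished without reading a checkpoint file.

```python
import copy


class SimulatedFault(RuntimeError):
    """Hypothetical stand-in for a rank failure detected mid-step."""


def train_step(state, step, fail_at):
    # Fail once at each configured step to simulate a fault,
    # before any state is modified.
    if step in fail_at:
        fail_at.discard(step)
        raise SimulatedFault(f"rank failed at step {step}")
    state["weights"] += 1.0
    state["step"] = step + 1


def run(total_steps, fail_at):
    state = {"weights": 0.0, "step": 0}
    # Assumption: a healthy peer keeps an in-memory copy of the state.
    peer_replica = copy.deepcopy(state)
    recoveries = 0
    while state["step"] < total_steps:
        try:
            train_step(state, state["step"], fail_at)
            # The peer copy is refreshed after every successful step.
            peer_replica = copy.deepcopy(state)
        except SimulatedFault:
            # Recover in-process from the peer copy; the process does not
            # exit and no checkpoint file is loaded.
            state = copy.deepcopy(peer_replica)
            recoveries += 1
    return state, recoveries


if __name__ == "__main__":
    final_state, recoveries = run(total_steps=5, fail_at={2})
    print(final_state, recoveries)
```

In this sketch the loop resumes at the step that failed, so no completed steps are repeated. A conventional restart would instead relaunch the process and replay from the last saved checkpoint.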